The AI Development Workflow That Changed How We Build Software

January 19, 2026

I recall a time at Radware in 2017 when we spent six months developing a management console for the AppWall product. It took a whole team and thousands of commits, and it ultimately led to a successful launch after two other teams had failed.

Today, at PolarGrid, we shipped our first production management console in just over a week. No design team needed. AI created a superior design. A single competent engineer, proficient with LLMs, handled the entire delivery. We iterated on feedback and shipped.

But what about quality?

“Our CI has teeth,” proclaimed a Slack message in our #engineering channel. 573 unit tests across the management console, SDK, and edge agent. All written in a few hours by an engineer effectively using a model with access to our codebase.

Besides unit tests, how do we know our real-time edge infrastructure works for a customer? Even a year ago, a fully functional voice agent would have been unthinkable for us, taking the entire engineering team months to develop. This time, we built a fully functional voice agent test bench in a few days, dogfooding our own infrastructure. AI enabled us to implement layers of testing, from unit to integration to end-to-end, ensuring proper change management and quality.

This is how we ship at PolarGrid.

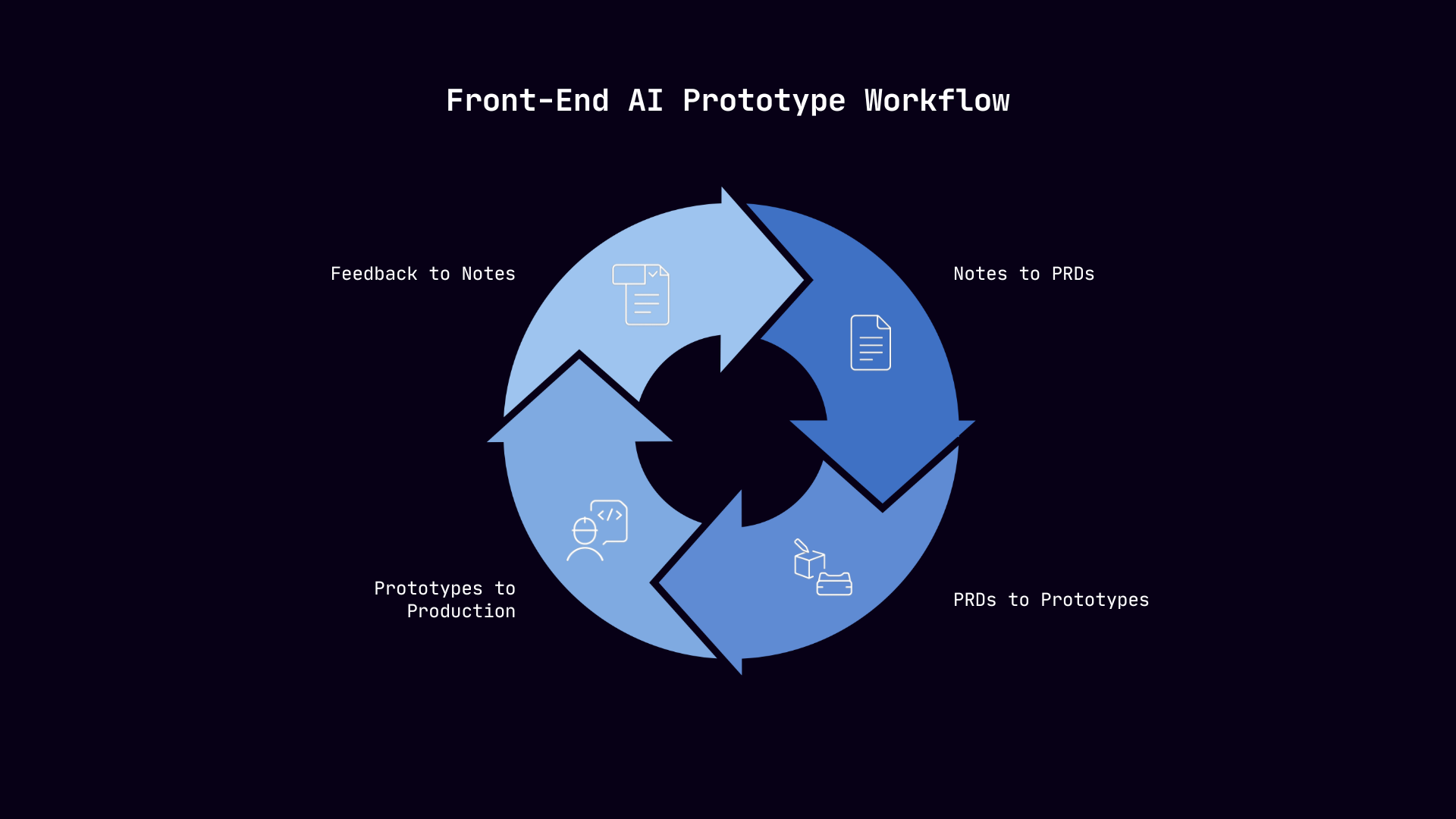

Here's the pipeline that replaced months of front-end work:

Notes → Product Requirement Documents (PRDs) → LLM Artifact Prototypes → Production Web Application → User Feedback → Notes

Raw notes consist of customer conversations, sketches, transcriptions, or voice memos.

While I use AI notetakers, I still pay close attention in meetings and take notes by hand, because the AI summaries often miss nuance and key points. This step converts human thinking into structured product requirements: I use AI to transform these fragments into PRDs, giving the model context about our architecture, our constraints, and our customers. The output is a structured document with clear acceptance criteria.
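To make the notes-to-PRD step concrete, here is a minimal Python sketch. It assumes a hypothetical `ProductContext` (architecture, constraints, customers) and a hypothetical `PRD` shape; it only assembles the prompt and checks that a returned PRD carries acceptance criteria. The model call itself is not shown, and PolarGrid's actual prompts and PRD template will differ.

```python
from dataclasses import dataclass, field


@dataclass
class ProductContext:
    """Hypothetical bundle of context handed to the model with the raw notes."""
    architecture: str
    constraints: list[str]
    customers: str


@dataclass
class PRD:
    """Hypothetical shape of the structured PRD we ask the model to return."""
    title: str
    problem: str
    requirements: list[str]
    acceptance_criteria: list[str] = field(default_factory=list)

    def is_reviewable(self) -> bool:
        # Without requirements and acceptance criteria, the PRD can't be verified.
        return bool(self.requirements) and bool(self.acceptance_criteria)


def build_prd_prompt(notes: list[str], ctx: ProductContext) -> str:
    """Combine raw notes with architecture, constraints, and customer context."""
    constraints = "\n".join(f"- {c}" for c in ctx.constraints)
    raw_notes = "\n".join(f"- {n}" for n in notes)
    return (
        "Draft a Product Requirements Document from the notes below.\n\n"
        f"## Architecture\n{ctx.architecture}\n\n"
        f"## Constraints\n{constraints}\n\n"
        f"## Customers\n{ctx.customers}\n\n"
        f"## Raw notes\n{raw_notes}\n\n"
        "Return a title, a problem statement, requirements, "
        "and testable acceptance criteria for each requirement."
    )
```

Rejecting a PRD without acceptance criteria before anyone builds from it keeps the prototype step anchored to something testable.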

A well-written PRD becomes a working prototype in minutes.

These prototypes are functional web components you can interact with, test, and break, so stakeholders see UX designs immediately and can give feedback. Want them in the style of your website? Take a screenshot of a sample page and provide the image as context.

The jump from prototype to production is where inexperienced developers get stuck for weeks, sometimes forced to rebuild entire applications from scratch.

The artifact is a proof of concept, while production code requires state management, error handling, edge cases, API integration, and proper testing. The transition requires someone who understands both what the artifact demonstrates and what production demands. At this point, we iterate, refactoring something that works on the happy path into something that scales.
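The gap between an artifact and production code often shows up in a single data-loading function. The sketch below is illustrative only: `fetch`, the `/items` path, and `LoadError` are hypothetical stand-ins, not PolarGrid code. The first function is the happy path a prototype gets away with; the second retries transient failures with exponential backoff and tolerates malformed responses.

```python
import time
from typing import Any, Callable

# Hypothetical client: takes a path, returns parsed JSON, raises on network errors.
Fetch = Callable[[str], dict[str, Any]]


def load_items_prototype(fetch: Fetch) -> list[str]:
    # Artifact-style happy path: assumes the call succeeds and the payload is well formed.
    return [item["name"] for item in fetch("/items")["items"]]


class LoadError(Exception):
    """Raised when the backend is still unavailable after all retries."""


def load_items(fetch: Fetch, retries: int = 3, backoff_s: float = 0.2) -> list[str]:
    """Production-style version: retries transient failures and skips malformed records."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    for attempt in range(retries):
        try:
            payload = fetch("/items")
            break
        except (ConnectionError, TimeoutError) as exc:
            if attempt == retries - 1:
                raise LoadError("backend unavailable after retries") from exc
            time.sleep(backoff_s * 2 ** attempt)  # exponential backoff between attempts
    # Edge cases: missing or null "items", and records without a string "name".
    items = payload.get("items") or []
    return [i["name"] for i in items if isinstance(i, dict) and isinstance(i.get("name"), str)]
```

Injecting `fetch` also keeps the function testable without a network, which is part of what "proper testing" asks of production code.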

We build everything for the end user, with ease of use as the priority.

Our team members and design partners give feedback on the product, and the team uses an LLM to turn those notes into the next PRD. That keeps the feedback cycle going and improves the product with each iteration.

There's a persistent myth that AI-generated code is untestable or generates technical debt.

Concerns that AI will produce worse software are often exaggerated. They overlook the fact that LLMs give us the raw horsepower to build far better test coverage and to iterate more rapidly than we could before LLMs.

At PolarGrid, AI-assisted development enabled us to build layers of testing, from unit to integration to end-to-end, that we wouldn't have had time to write manually. We can invest in quality because we stopped spending all our time on boilerplate.

AI generates fast, yet humans discern.

Every stage of our workflow involves iteration. The first PRD output needs refinement. The first artifact prototype reveals gaps in the requirements. The first production refactor surfaces edge cases that engineers did not consider.

Speed without iteration is just fast failure. The productivity gains come from rapid cycles: generate, evaluate, adjust, generate again. Each loop improves the solution.
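The generate, evaluate, adjust loop can be sketched as a small higher-order function. This is a minimal sketch under stated assumptions: `generate` wraps whatever model call you use, and `evaluate` stands in for the human reviewer, returning `None` when the output is good enough or feedback text otherwise. Both names, and `refine_until_accepted` itself, are hypothetical.

```python
from typing import Callable, Optional


def refine_until_accepted(
    generate: Callable[[str], str],
    evaluate: Callable[[str], Optional[str]],
    spec: str,
    max_rounds: int = 5,
) -> tuple[str, int]:
    """Generate, evaluate, adjust, generate again; return (accepted draft, rounds used)."""
    for round_no in range(1, max_rounds + 1):
        draft = generate(spec)
        feedback = evaluate(draft)  # None means the reviewer judged it good enough
        if feedback is None:
            return draft, round_no
        # Adjust: fold the reviewer's feedback into the next request.
        spec = f"{spec}\n\nReviewer feedback:\n{feedback}"
    raise RuntimeError(f"no acceptable draft after {max_rounds} rounds")
```

Capping the rounds is deliberate: if a draft still isn't acceptable after a few loops, the requirements usually need rework, and that is a human decision.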

Human-in-the-loop remains necessary. The model doesn't know when the output is good enough. It doesn't know when the architecture will cause problems six months from now. It doesn't know when the user experience feels off, even though it technically works.

We evaluate the LLM’s output and decide what to keep, what to change, and what to throw away entirely. This judgment comes from experience, domain understanding, and having shipped systems before.

Discernment requires humility: showing users iterative products, listening to their feedback without defensiveness, and recognizing when AI-generated code is “good enough.”

AI without human discernment produces plausible nonsense. Human productivity without AI moves too slowly. The leverage lives in the combination.

One pattern I keep seeing: developers dump code into LLMs and expect miracles. It doesn't work that way.

Our internal PRDs reference our architectural decisions, while our prompts include examples and snippets from our actual codebase.

Giving LLMs access to relevant documentation transforms the results. For example, an agent struggles with tasks that fall outside its training data, such as working with a newly released open-source package. Pointing the model at that package's documentation helps it complete the task.
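One simple way to point a model at documentation is to rank doc sections against the task and prepend the best matches to the prompt. The sketch below assumes the docs are already loaded into a dict of section name to text; word-overlap ranking is a deliberately naive stand-in for real retrieval (embeddings, search, or an agent's own doc-fetching tool), and `select_docs` and `build_prompt` are hypothetical names.

```python
import re


def _words(text: str) -> set[str]:
    # Lowercase word tokens; underscores are kept so identifiers stay whole.
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def select_docs(task: str, docs: dict[str, str], budget_chars: int = 4000) -> list[str]:
    """Rank doc sections by word overlap with the task; keep what fits the budget."""
    task_words = _words(task)
    ranked = sorted(
        docs.items(),
        key=lambda item: len(task_words & _words(item[1])),
        reverse=True,
    )
    picked, used = [], 0
    for name, body in ranked:
        if not task_words & _words(body):
            break  # remaining sections share no vocabulary with the task
        if used + len(body) > budget_chars:
            continue  # too large for what's left of the budget; try smaller ones
        picked.append(f"### {name}\n{body}")
        used += len(body)
    return picked


def build_prompt(task: str, docs: dict[str, str]) -> str:
    """Prepend the selected documentation to the task description."""
    context = "\n\n".join(select_docs(task, docs))
    return f"Use the documentation below; prefer it over prior knowledge.\n\n{context}\n\n## Task\n{task}"
```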

Sorry to be the bearer of bad news, web developers and software outsourcers.

If you're working on vanilla, templated implementations, the value of your services is quickly decreasing. Front-end development is now firmly a domain of effective human-AI collaboration.

My inbox is full of inbound requests from firms offering software development help. What we need instead are committed creators and experts who use AI effectively in their own fields. The sun is setting on outsourced vanilla development and rising on insourced, effective human-AI collaborators.

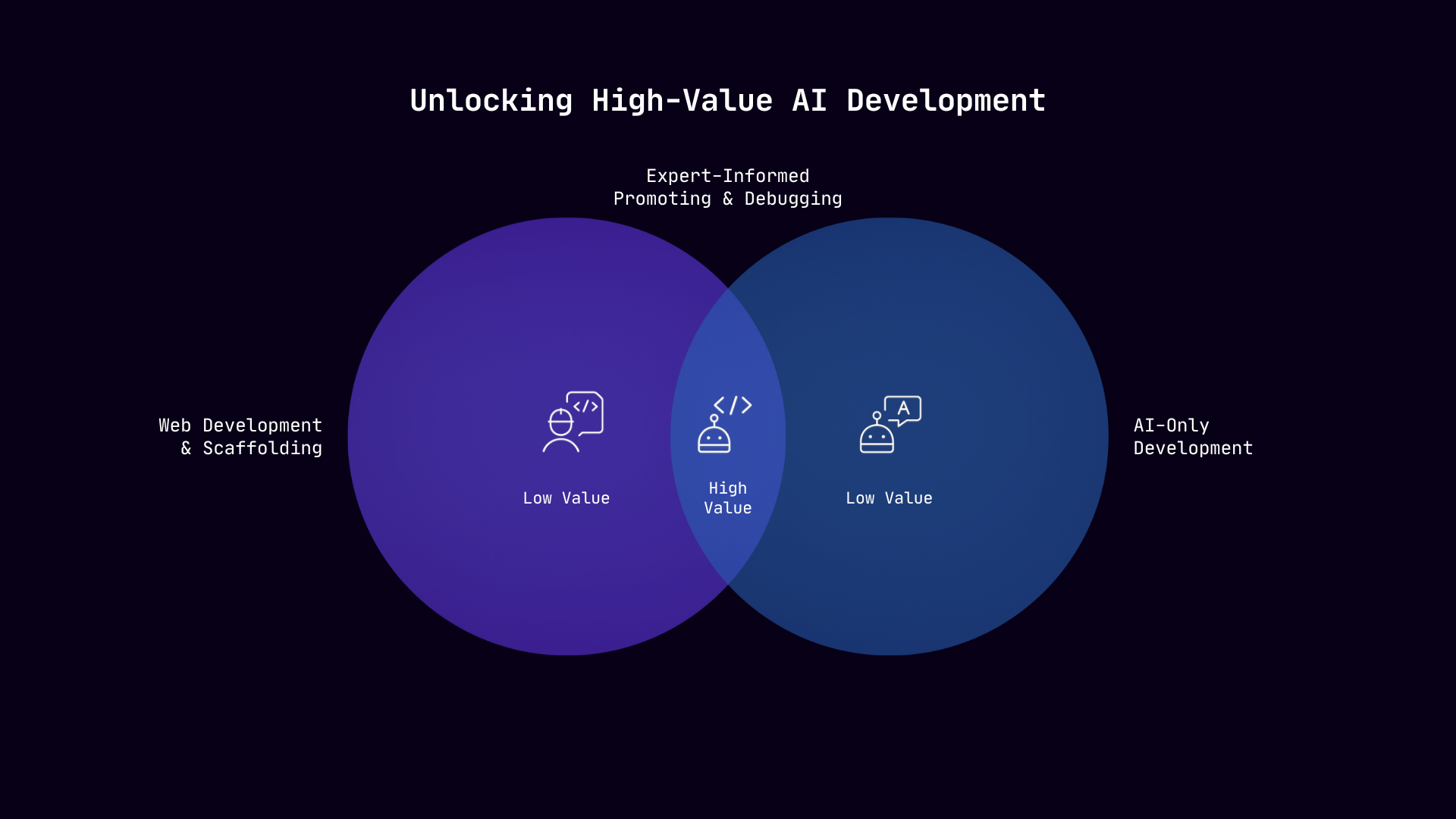

So what separates developers who thrive from those getting displaced?

Strong software architecture fundamentals combined with the ability to prompt models effectively, converting your architectural vision into workable outputs. You need to know what good looks like before you can guide an AI toward it.

When LLM workflows hit bottlenecks (and they will), you will fall back on your debugging skills. I've seen inexperienced developers stuck for weeks on problems that a senior engineer solves in an hour. The AI doesn't know it's wrong. You do.

Independent product delivery: engineers own complete features, gather feedback, and iterate on requirements without handoffs. The old model of throwing work over the wall to the next team dies when one person with AI can do what three teams used to do.

LLMs and AI agents don’t have a face. Humans do. Showing up to meetings with customers and the team is irreplaceable and more crucial than ever. Bring your humanity forward. Chat to your team. Get their feedback. And take that collaborative intelligence back into your workflows. Improve them.

This evolution creates an interesting paradox: web developers without AI expertise offer diminishing value, while AI-only developers without fundamentals hit walls quickly.

If you're an experienced developer ignoring AI, you're leaving a 10x multiplier on the table. If you're an AI enthusiast without engineering fundamentals, you're building on sand.

The future belongs to those who synthesize both. AI accelerates everything you already know how to do, and your expertise guides AI past the places it gets stuck.

The massive value lies at the intersection of AI horsepower and human discernment, and it is produced by those who master both.

By Sev Geraskin, Co-Founder and VP of Engineering, PolarGrid